Garbage In, Garbage Out: The High Cost of Managing Healthcare Data Quality

Many standardized data coding sets exist to help capture and communicate a person’s care data across and between health systems. Several organizations have done their part to keep healthcare data capture and communication from becoming a “Tower of Babel”: the Regenstrief Institute established LOINC, the World Health Organization established ICD-10, and the Centers for Medicare & Medicaid Services (CMS) established HCPCS.

Adding another layer of needed structure, Health Level Seven International (HL7) continues to champion standardization. It has provided additional scaffolding for the structure and exchange of healthcare messages in pursuit of its vision of “a world in which everyone can securely access and use the right health data when and where they need it.”1

Despite this progress toward interoperability, a major data quality challenge sits upstream of these efforts: capturing the right information and standardizing it correctly before the data is shared or analyzed for care and performance insights.

Those downstream analyses can be compromised when complex operations yield data that is incomplete, duplicative, inaccurate, or organized with non-standard code sets, and notable errors and gaps are common. In a 2020 JAMA Network Open study in which patients reviewed their ambulatory care notes for mistakes, the most common serious errors involved current or past diagnoses (27.5%), inaccurate medical histories (23.9%), and medications or allergies (14.0%).2

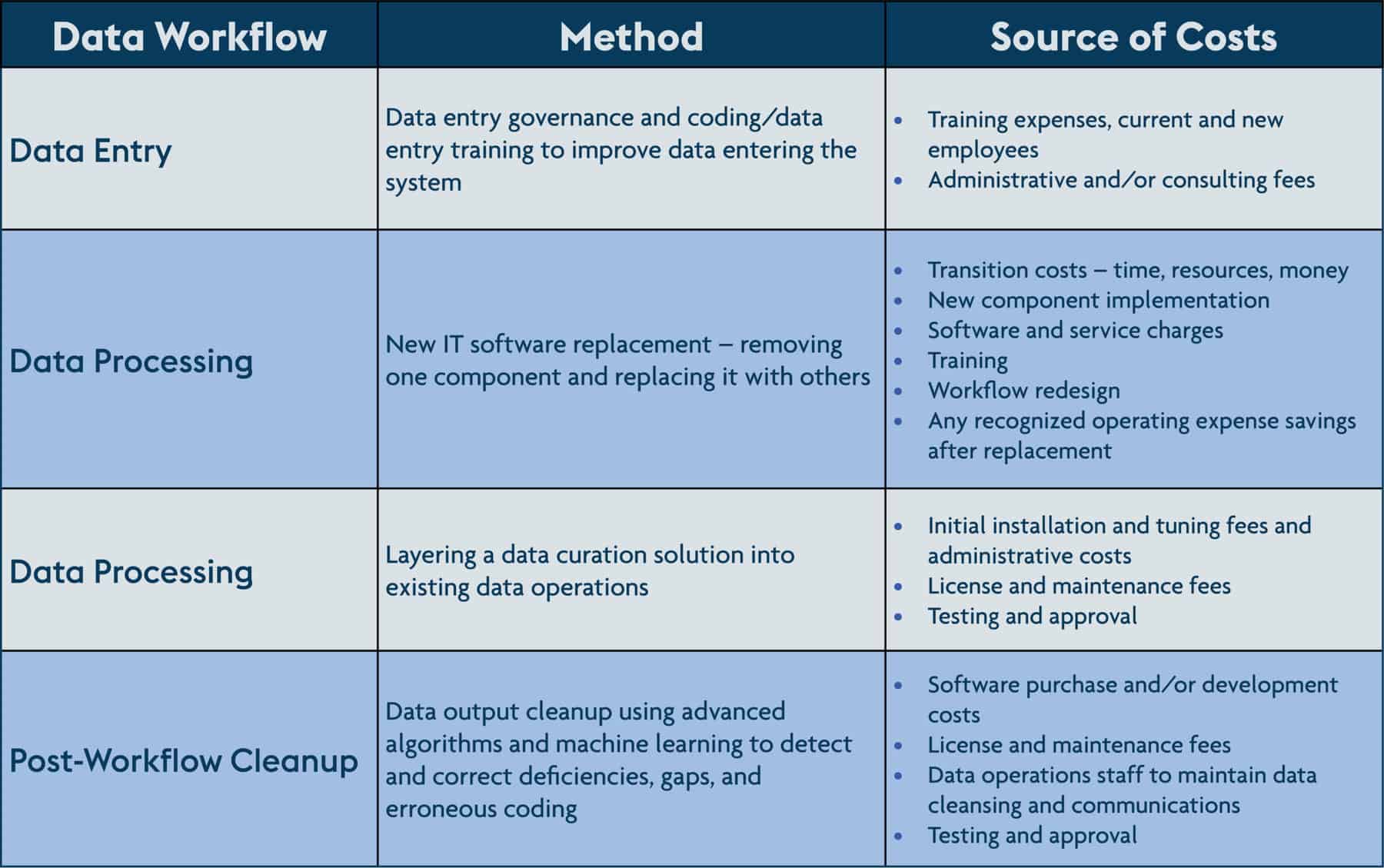

Because poor-quality data can hinder decision making, the “garbage in, garbage out” dynamic drives up the labor and software costs of fixing missing, duplicative, or inaccurate data. Organizations looking to improve data quality have several avenues for producing cleaner data for analysis, and some are more resource-intensive than others.

Each of these approaches has trade-offs in resource allocation, time to implement and maintain, and expected outcomes. One way to minimize cost is to layer a data curation service onto existing data operations, cleaning and standardizing data alongside the existing processing workflow. The benefits are twofold. First, such a solution can fit within current operations as-is. Second, by detecting and fixing data gaps and deficiencies, it can significantly improve the quality of data available for downstream analysis and other use cases.
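To make “detecting and fixing data gaps and deficiencies” more concrete, here is a minimal sketch of a single curation pass, assuming records arrive as Python dictionaries. It is not how any particular curation product works: the field names (`patient_id`, `code_system`, `code`, `date`), the required-field list, and the code-system alias table are all hypothetical. The pass flags records with missing fields, maps local spellings of a code system to one canonical label, rejects unrecognized code systems, and drops exact duplicates.

```python
from dataclasses import dataclass, field

# Hypothetical alias table: local spellings of a code system -> one canonical label.
CODE_SYSTEM_ALIASES = {
    "loinc": "LOINC",
    "icd10": "ICD-10",
    "icd-10": "ICD-10",
    "hcpcs": "HCPCS",
}

# Hypothetical minimum set of fields every record must carry.
REQUIRED_FIELDS = ("patient_id", "code_system", "code")


@dataclass
class CurationResult:
    clean: list = field(default_factory=list)   # standardized, de-duplicated records
    issues: list = field(default_factory=list)  # (record index, description) pairs


def curate(records):
    """Flag gaps, standardize code-system labels, and drop exact duplicates."""
    result = CurationResult()
    seen = set()
    for i, rec in enumerate(records):
        # Gap detection: any required field that is absent or empty.
        missing = [f for f in REQUIRED_FIELDS if not rec.get(f)]
        if missing:
            result.issues.append((i, "missing " + ", ".join(missing)))
            continue

        # Standardization: normalize whitespace/case, then look up the canonical label.
        raw_system = str(rec["code_system"]).strip().lower()
        system = CODE_SYSTEM_ALIASES.get(raw_system)
        if system is None:
            result.issues.append((i, f"non-standard code system {rec['code_system']!r}"))
            continue
        cleaned = {**rec, "code_system": system, "code": str(rec["code"]).strip().upper()}

        # De-duplication: same patient, code system, code, and date counts as a duplicate.
        key = (cleaned["patient_id"], system, cleaned["code"], cleaned.get("date"))
        if key in seen:
            result.issues.append((i, "duplicate record"))
            continue
        seen.add(key)
        result.clean.append(cleaned)
    return result


if __name__ == "__main__":
    sample = [
        {"patient_id": "p1", "code_system": "loinc", "code": "2345-7", "date": "2022-03-01"},
        {"patient_id": "p1", "code_system": " LOINC ", "code": "2345-7", "date": "2022-03-01"},
        {"patient_id": "p2", "code_system": "local-lab", "code": "GLU"},
        {"patient_id": "", "code_system": "icd10", "code": "e11.9"},
    ]
    out = curate(sample)
    print(len(out.clean), out.issues)
```

With the four sample records, one clean record survives and three issues are logged: a duplicate (the second record differs only in spacing and case), a non-standard code system, and a missing `patient_id`. Flagging rather than silently discarding problem records keeps them available for review, which fits the idea of curating alongside an existing workflow instead of replacing it.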

While each approach can mitigate the “garbage in” problem to some degree, the data curation option builds on the existing and evolving standards of organizations such as HL7 without major disruption to staff and other resources. To learn more about the different avenues for improving data quality, read the white paper “The Demand for Quality Insights and the Promise of Data Curation Services.”

1 HL7 International. About HL7. http://www.hl7.org/about/index.cfm?ref=nav. Accessed March 28, 2022.

2 Bell SK et al. Frequency and types of patient-reported errors in electronic health record ambulatory care notes. JAMA Network Open. 2020;3(6):e205867.